CRDTs: Conflict Resolution with SEC (Strong Eventual Consistency)

CRDT, Conflict-free Replicated Data Type, and by definition, it’s a Data Structure which can be replicated, where any of the replica can be modified without communicating with others but they receive updates once they’re back online, eventually reach to same state, deterministically, storing exactly the same data. By “deterministically” (as in automata theory), it means same state is achieved when given same input, not to be confused with idempotency"

Confusing? well, CRDTs are considered as complex topic to deal with and always receive criticism, so let’s go back and completely understand it bit by bit. Knowing consensus will help understand them better, one can assume that section below as multi-leader setup (instead of nodes), not a prerequisite though.

CRDTs in a much simpler analogy w.r.t. git, git conflicts will be automatically solved without user interference. Now that’s interesting !!

Handling Write Conflicts

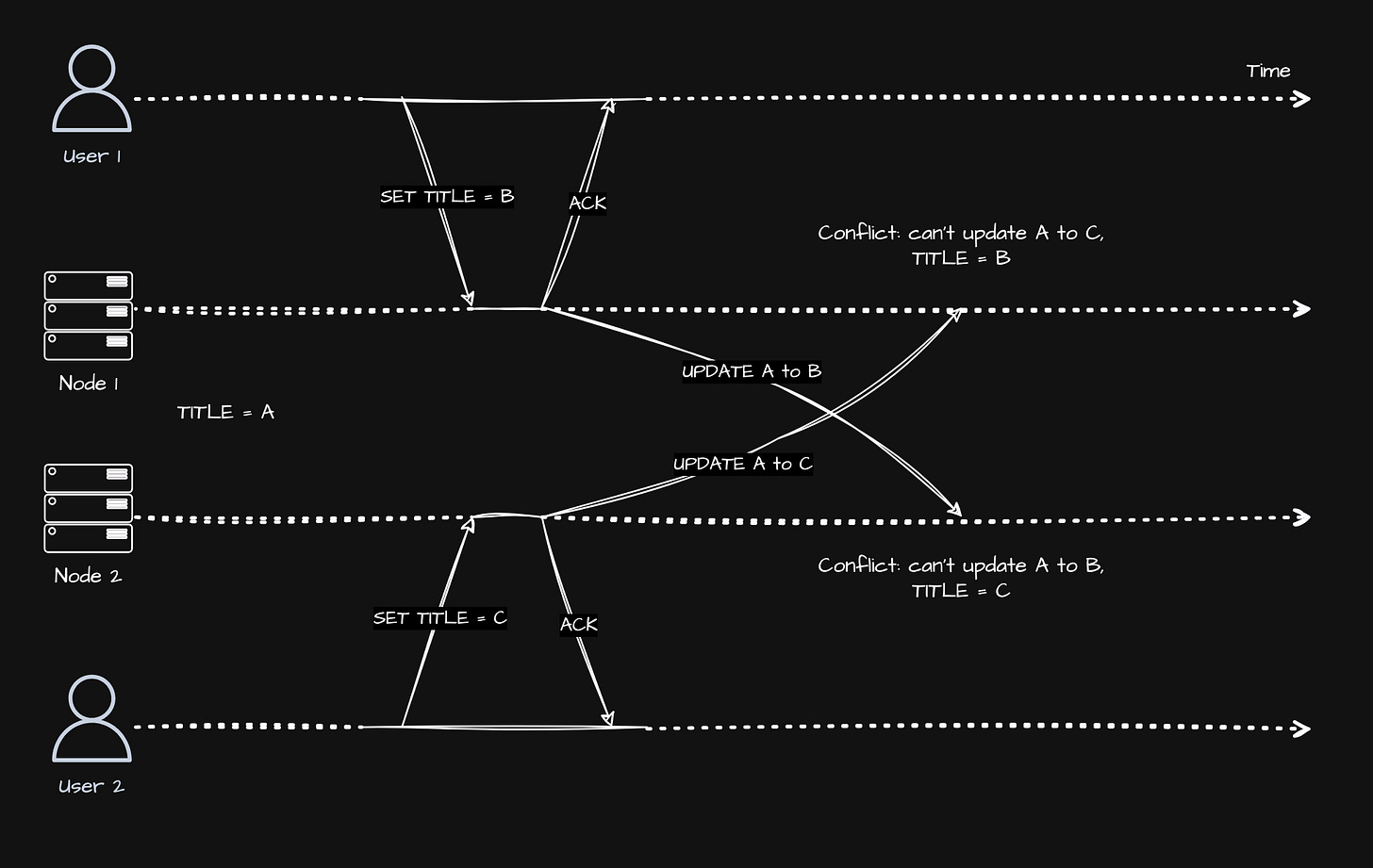

But before how are conflicts occurring? Imagine two users updating same content and pushing their updates to their local replica node, now on receiving acknowledgement that it’s updated, both the nodes will have different values. Replicas should be synced with each other at some point of time, asynchronously, now the conflict arises as both the writes show different value. Figure 1.1 for reference.

To overcome this, a lock can be maintained between them, so they can wait till a write is replicated in all other replicas, but this defeats the entire purpose of replicas working independently themselves. What if lock is not Pessimistic like Mutex or Semaphore but Optimistic, even this won’t work as dirty reads can happen when it tries to write back i.e, content we’re trying to update may not be the same when we initially read it, it could be updated before we write again.

We can purposefully ignore initial writes by LWW (Last Write Wins), but it’s not in our case as there is no definite order and if we update in the order that is seen by one replica but not the other, the entire state will become inconsistent because of time as time won’t be consistent across systems. To solve this, there are few options

Leverage same LWW by appending unique id (like timestamp or uuid), take highest id as winner, popular choice.

Prioritize replicas, need no much explanation, just pick write which originated from replica with most priority.

Above both are prone to data loss, but can be avoided by recording conflict and resolving later (possibly by user intervention, effectively beats the purpose again).

There is another way to solve this by using some custom hardcoded logic which should be ran either on write or read.

By “on write”, the logic that we gave will be ran when a conflict is detected, like say timestamp is prioritized, we already know this. By “on read”, the way CouchDB handles, all conflicted writes are stored and when we try to read it, all writes will be sent back so that user can select relevant write required.

Now that we got to know about the problem and ways around it, how to solve the problem “automatically”? Two of the popular solutions are using Operational Transformation & CRDTs.

Operational Transformation

We can take collaborative-text editing as classic example to get into details. OTs came a long way from Google (Apache) Wave (in 90s) to well-working Google Docs (was Writely, got acquired by Google), an interesting story altogether.

A former engineer for Wave and author of the ShareJS lib said that

It [Wave] never really worked. I gave a talk at the Wave Protocol Summit [..] I practiced [..] I followed literally step by step on the day and the version I made live didn’t work [..] Whatever the bugs are, I don’t think they were ever fixed in the opensource version. Its all just too complicated.

As name says, objects get transformed as different operations are being applied. OTs work on core principle of storing/logging events chronologically, following causality. Operations can be as simple as inserting/creating, updating, deleting. So every event (operation) is then passed to every other client through a centralized server, in real time. When there are concurrent edits, log is then used to transform accordingly.

The algorithm we’ve just seen is from Jupiter’s Paper which explains xform() function, i.e., transformation formula. More about foundational OTs can be found in the same paper on how xform is working by taking operations from client & server with resultant transformations.

CRDT

So we now got to know more of problem we’re trying to solve. Imagine that updates/changes, made in local client(s), are getting updated with a network round trip to a centralized server and suddenly there is a network partition at one of the clusters/nodes. Because of dependency on a centralized server, during a network drop, we won’t be able to make the changes at all. Solution that CRDTs, as a concept, proposes is, keep/log the updates locally in machine and next time when it goes online, replicate those changes using centralized server or local infra or even p2p. The order of changes need not to be same, but eventually all will have same state/structure providing convergence guarantee.

There is no explicit need of the communication layer i.e., connection to centralized server, all the time, especially when operations are being made.

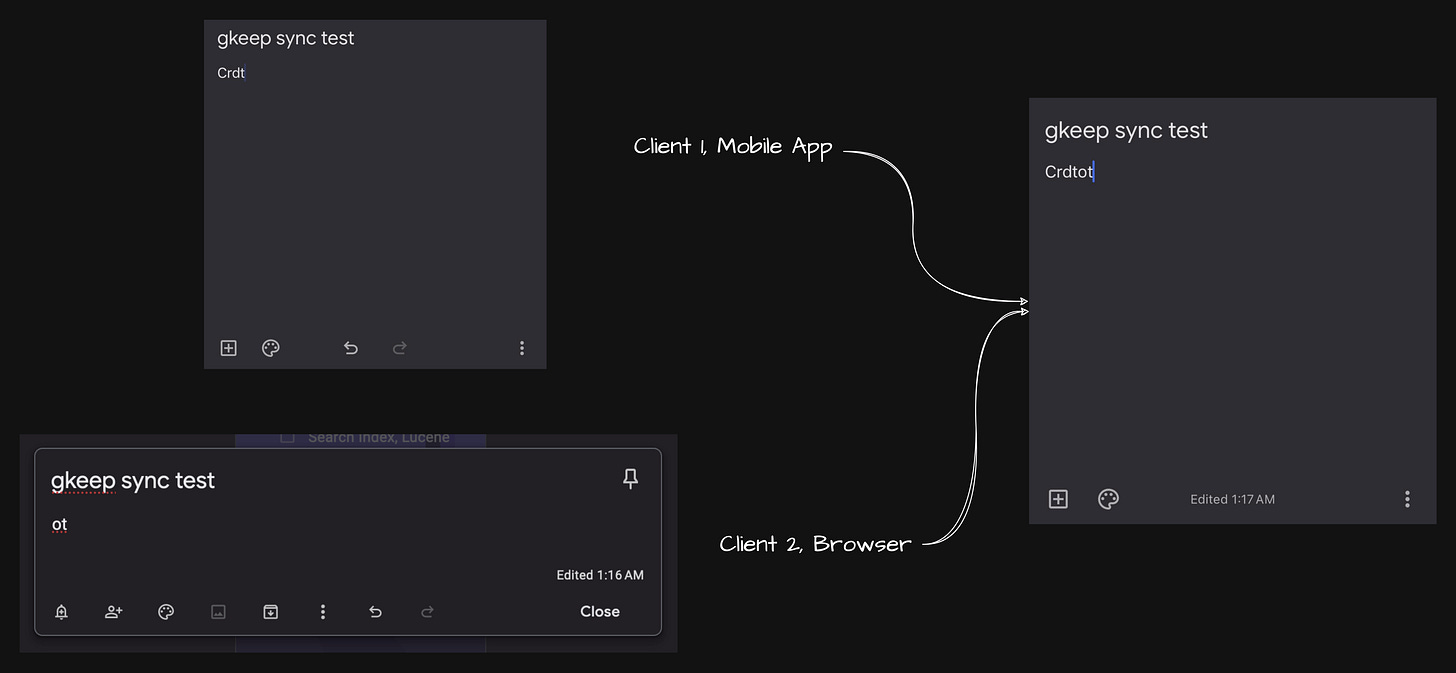

I tried to test the same with Google Keep (just illustration, might not be CRDT under the hood likely)

Created a empty document when both clients were online, turned off internet, entered Crdt in client 1 & ot in client 2, then turned on Internet. Both got synced to Crdtot. There is an ambuguity, Crdt got placed before ot but not the reverse. How they decided to keep it that way? Consensus, agreeing to pick one from a bunch of possibilities, is applied on both the op.s, given that both the operations are present at the time of selection.

Implementation

A CRDT is just a data structure, potential is only unlocked with rightful implementation. There are so many CRDTs just like there are data structures. They come in trees, graphs, lists etc. For the sake of simplicity, let’s consider strings/arrays of chars (classic collaborative text editing) and go through implementation to put that as tree (CRDT).

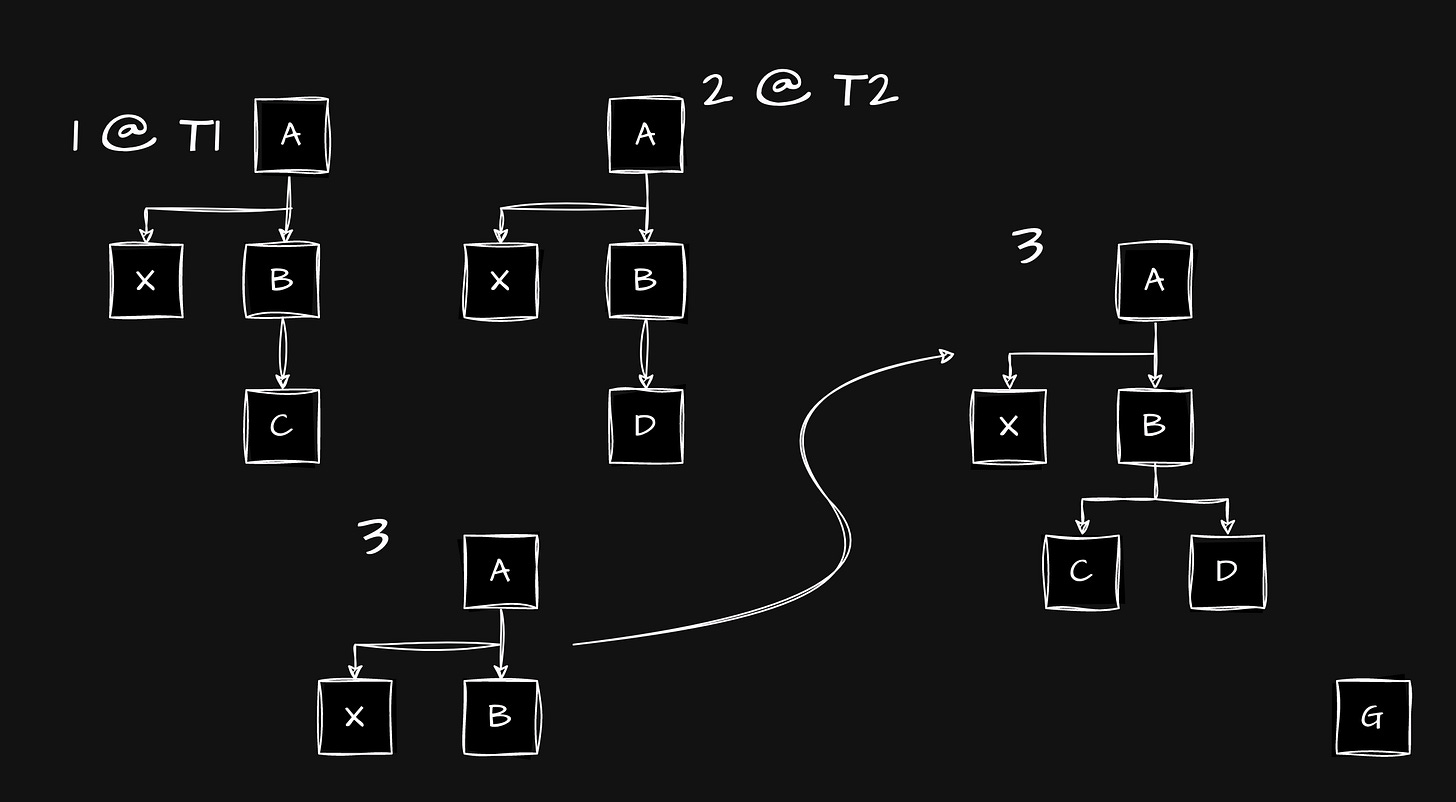

Initial state is [A, B] & there are 3 nodes, (figure 1.3 for reference). Now we want to insert X between A & B, so let’s make it as left child to A, on doing DFS (Depth First Search), traversal can be seen as [A, X, B], looks good and as expected.

Now there is a conflict, someone at first node inserted C at time T1 and someone at second node inserted D at time T2 where T2 < T1. Now LWW, T1 got precendence, so C will be the left child & D as right child. Now when we do DFS, [A, X, B, C, D].

Similarly, the tree will grow with more inserts in causal order, by doing DFS, the resultant traversal will be the state.

Similarly, there are different algorithms, to get some flavour and they can explain better than me

JSON CRDT with LWW, Replicated Growable Array (RGA)

Build the tree, connect each item to its parent.

When an item has multiple children, sort them by sequence number (which defines order of insertion, new insert will get previous insertions’ ID + 1) then by their ID.

The resulting list (or text document) can be made by flattening the tree with a depth-first traversal.

CRDTs in Y.js, Y.js Github, Y.js Internals [walk through]

Instead of implementing the CRDT as a tree like RGA does, Y.js just puts all the items in a single flat list.

But insertion becomes complex with Y.js

Finding the parent item.

Starting right after the parent item, iterate through the list until we find the location where the new item should be inserted.

Inserting it there, splicing into the array.

Yes, insertion sort.

Strong Eventual Consistency

Consistency can be divide into classes as strong, eventual and strong eventual consistencies, based on the guarantees they provide. The goal of strong consistency is to make a system behave like a single sequentially executing node, even when it is replicated and concurrent. Most systems implement strong consistency by designating a single node as the leader, which decides on a total order of operations and prevents concurrent access from causing conflicts. Used by many relational DBs like PostgreSQL.

Depending on the application, we may not need Strong Consistency, as single leader model limits performance or usage. It is not possible to have Strong Consistency when we’re heavily distributed. EC guarantees that if no new updates are made to the shared state, all nodes will eventually have the same data. Since this model allows conflicting updates to be made concurrently, it requires a mechanism for resolving such conflicts. For example, version control systems such as Git where merge conflicts need to be resolved and some NoSQL (Distributed) DBs like Cassandra adopt LWW policy, under which only one update is chosen as the winner, and concurrent updates are discarded.

Strong eventual consistency (SEC) is a model that strikes a compromise between strong and eventual consistency. Informally, it guarantees that whenever two nodes have received the same set of updates—possibly in a different order—their view of the shared state is identical and any conflicting updates are merged automatically. This exactly sums up what we’re seeing in CRDTs and how they’re SEC.

Byzantine Fault Tolerance

When a node deviates from the specified protocol, we call it Byzantine-faulty (or simply Byzantine), regardless of whether the deviation is by accident or by malice. Since it is assumed that all participating nodes correctly follow the protocol, majority of CRDT algorithms actually do not tolerate Byzantine faults. The lack of BFT can be justified by restricting to small groups of nodes, by not increasing cardinality.

Then how to make CRDTs BFT. Hashgraph, known as Merkle DAG (similar to merkle trees), also an alternate to blockchain with high performance. It’s amazing that dots are getting connected from distinct places. With that, any #nodes can be byzantine in nature, no xf + y like 3f+1, can be arbitrary. Even no quorums.

Hashgraph is the same thing that’s seen in git, IPFS. You can understand it just by going through this for a minute or two. Now that you understood how merkle tree works, applying same concept on graphs, it’s a directed acyclic graph where nodes correspond to versions of the content and edges correspond to changes (diffs in git/updates in CRDT). Each node will have hash, which comes by hashing node’s content, as it’s id.

Coming to context of “how this makes BFT”? Simple, nodes exchange updates in CRDTs but now in addition to that, they also exhange hash of that update. A malicious update by Byzantine could be, Byzantine sending different data as same update to multiple nodes. In this case, when nodes (apart from Byzantines) exchange the malicious updates, they’ll see that hash is different and discard those updates, the same is to be communicated between them. This communication happens on Gossip protocol where propagation starts at one node, nodes randomly propagate to other nodes until every node receives the message that update is malicious, need to be discarded.

More about this in these papers 1 2

Real world Applications

Meta/Facebook implemented CRDTs in their Apollo low-latency "consistency at scale" database. [HN]

Apple implements CRDTs in the Notes app for syncing offline edits between multiple devices.

Redis created a DB, which is active-active, called CRDB, which relies on CRDTs.

It offers local latency on read and write operations, regardless of the number of geo-replicated regions and their distance from each other.

It enables seamless conflict resolution (“conflict-free”) for simple and complex data types like those of Redis core.

Even if most of the geo-replicated regions in a CRDB are down, the remaining geo-replicated regions are uninterrupted and can continue to handle read and write operations, ensuring business continuity.

Riak, used as Distributed DB by Uber.

League of Legends uses the Riak CRDT implementation for its in-game chat system, which handles 7.5 million concurrent users and 11,000 messages per second.

Write-through Cache, where data first goes to cache then disk/DB, alwasy a cache hit. Data should be consistent between cache & DB, not to forget, there can be simultaneous writes happening from different clients. CRDTs can be used when there is a conflict to choose between the writes.

More from github

Types in CRDTS

Operation-based CRDT

This is based on operations, similar to OT. Also called as Commutative RDT.

Taking an example of Distributed Counter (G-Counter or PN-Counter) where eventual consistency is applied.

Grow-only Counter is a type of counter where only increments are handled and we can’t negate or make a decrement, monotonic to be specific, only increases but won’t decrease. So something likes views in YouTube.

Positive Negative-Counter consists of two G-Counters, one for increments and one for decrements, in order to get count we calculate #increments - #decrements. Most likely the implementation of upvotes and downvotes in Reddit.

This is what happens in counter at high level, going low level. There will be version vector(s) maintained at every node, enabling causality. Vectors get updated with operation which can be some function i.e., increment(NodeId id), increment function takes node’s id as argument. This same operation is propagated within all the nodes.

In order to be consistent with time in distributed systems and maintain causal order, Lamport timestamps or Vector Clocks are used. Communication channel should be reliable between nodes, there shouldn’t be any drops or dupes as operations won’t be idempotent.

State-based CRDT

So far we’ve seen how operations are moved from one to another. In state-based — also called as Convergent RDT — the whole (state of) CRDT is transferred. Converging/Merging process follows the properties which are associative, commutative & idempotent, then using Gossip protocol, which was mentioned earlier in BFT section, these updates are propagated.

Counters actually exist with state-based CRDTs also, but we can see that downside with propagating the whole structure/object when nodes grow in size. As for the converging process and properties followed by it, Distributed Sets can be discussed.

Distributed Sets, where possibilities are addition & eviction of keys, so every node will have some records in, say, two sets add & remove. To merge, we simply need to union all the records from every remove set, along with that union for every add set. Now records from remove’s union set will be removed from add’s union set, but problem comes when a record is need to be re-added. To mitigate this, we tag keys with some values likes hashes, so only some hash valued keys will be removed.

δ (Delta) state CRDT

In order to reduce the overhead of sending whole CRDT, Delta state CRDTs emerged. In this, the difference in state is passed, similar to Differential Synchronization mentioned in conclusion section.

Conclusion

CRDTs are complicated to get started with, in general, and are hardly convincing because of so much storage/memory overhead.

If temporary inconsistencies can be looked over, using eventual consistency is better generally.

Not saying that OTs are better than CRDTs or vice versa, there are always trade-offs. There is also Differential Synchronization from Google (paper, tech-talk).

What we’ve seen so far is just tip of iceberg, there’s more to explore. Keeping up with HN comments for CRDTs, to see what’s happening rn and to get more use cases/ideas.

If you find any inaccuracies or omissions, please leave a comment and I will try to fix them. Diagrams drawn using draw.io.

References & Further Reading

Multi Leader Replication : Handling Write Conflicts (Part II Distributed Data, Chapter 5 Replication) in Designing Data-Intensive Applications (DDIA) by Martin Kleppmann

crdt.tech, alangibson/awesome-crdt

Conferences/Talks on CRDTs by Martin Kleppmann (thanks!)

Verifying Strong Eventual Consistency in Distributed Systems

Making CRDTs Byzantine Fault Tolerant

Introduction of Tombstones in CRDT